Fresh off its 20th birthday, robots.txt, the file that tells search engine crawlers which parts of a site to stay out of, is back in the news. This week Google announced an update to its robots.txt testing tool in Webmaster Tools. The search world is excited about this one, because it makes our lives so much simpler.

Robots.what?? And how does my site have robots on it?? If you aren’t immersed in digital marketing and aren’t even sure what a robots.txt file is, that’s OK: we are here to help.

What is a Robots.txt file?

The robots.txt file is a plain-text file hosted at the root of your domain (for example, mysite.com/robots.txt). It tells crawler bots which parts of your site shouldn’t be crawled, such as content behind a login, the backend of your site, or secure pages, and it can also point them to where things are located, such as your sitemap.

To make this easy to visualize, say you run an ecommerce site. You have a unique account for each of your customers, typically displayed in a directory similar to mysite.com/myaccount/(unique user id). Each of these URLs belongs to just one customer, so it makes little sense to have them in Google’s index: Google wants to index pages that are useful to many people. Unnecessary indexed URLs that receive very little traffic (remember, each one is useful to only one person) do you no good and can hurt your overall rankings. The robots.txt file can block crawlers from the mysite.com/myaccount/ directory, which keeps your indexed pages lean and prevents index bloat.
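As a minimal sketch, the rule for this example could be a two-line robots.txt that disallows /myaccount/ for every crawler. The Python snippet below uses the standard library’s `urllib.robotparser` to check which URLs that rule blocks. The file contents, mysite.com, and the sample paths are placeholders, and Python’s parser is only an approximation of how Googlebot itself reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for the example store: block every crawler ("*")
# from the per-customer account directory.
ROBOTS_TXT = """\
User-agent: *
Disallow: /myaccount/
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://mysite.com/myaccount/12345",  # one customer's account page
        "https://mysite.com/products/shoes",   # a public product page
    ):
        status = "allowed" if is_allowed(ROBOTS_TXT, url) else "blocked"
        print(url, "->", status)
```

Run as a script, it reports the account page as blocked and the product page as allowed, because only paths starting with /myaccount/ match the Disallow rule.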

What does the Robots.txt testing tool do?

The testing tool checks your robots.txt file for errors and warnings, such as rules that might be blocking crawls. That matters because if a search bot cannot access certain areas of your website, those pages could rank lower. Best of all, you can edit your robots.txt file right in the tool and test the result, which is great for digital marketers because they can work out a small change without waiting on the design team.

What about this update?

The update adds several new features:

- You can test changes to your robots.txt file before you make them live.

- You can see older versions of the file and compare past and present issues.

- You can easily find problems in the file, because the tool highlights the exact line that is causing each one!

- You can test specific URLs on your website to see whether Googlebot is “allowed” to crawl them.
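The idea behind the first and last items, testing an edit against specific URLs before it goes live, can be approximated offline. This sketch again uses Python’s standard `urllib.robotparser` as a stand-in for Googlebot’s own parser: it checks a list of URLs against both the current file and a proposed edit and reports every URL whose status would change. The function name `diff_robots`, the file contents, and the URLs are all hypothetical examples.

```python
from urllib.robotparser import RobotFileParser


def _parser(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text without fetching anything."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def diff_robots(current: str, proposed: str, urls, user_agent: str = "Googlebot"):
    """Return (url, allowed_now, allowed_after) for each URL whose status changes."""
    before, after = _parser(current), _parser(proposed)
    changes = []
    for url in urls:
        now = before.can_fetch(user_agent, url)
        later = after.can_fetch(user_agent, url)
        if now != later:
            changes.append((url, now, later))
    return changes


CURRENT = """\
User-agent: *
Disallow: /myaccount/
"""

# Hypothetical edit: also block a /checkout/ directory.
PROPOSED = """\
User-agent: *
Disallow: /myaccount/
Disallow: /checkout/
"""

if __name__ == "__main__":
    urls = [
        "https://mysite.com/myaccount/12345",
        "https://mysite.com/checkout/cart",
        "https://mysite.com/products/shoes",
    ]
    for url, now, later in diff_robots(CURRENT, PROPOSED, urls):
        print(f"{url}: {'allowed' if now else 'blocked'} -> {'allowed' if later else 'blocked'}")
```

Here only the checkout URL is reported (allowed before, blocked after); URLs whose status stays the same are left out so a reviewer sees just the effect of the edit.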

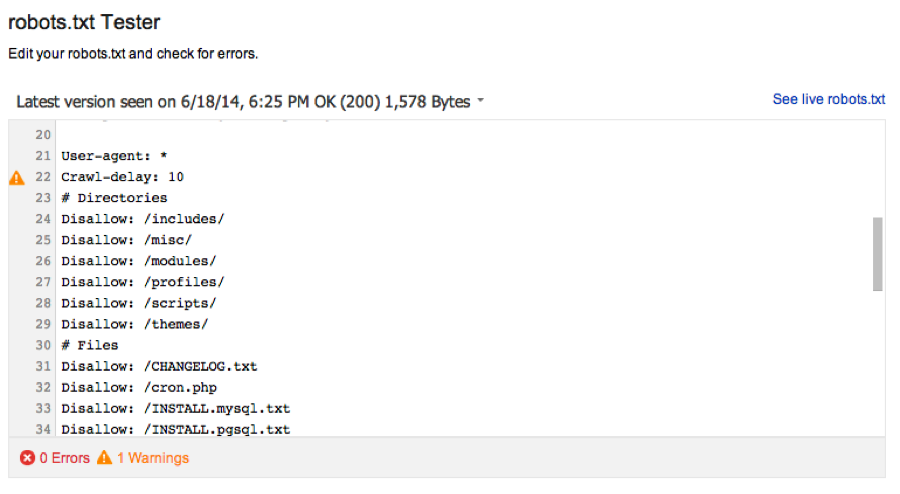

Here is a screenshot of a robots.txt file that has one warning. A small orange triangle sits next to the line causing the issue, so you can quickly scan the file, identify the problem, and fix it.
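To illustrate the idea of flagging the exact line that causes a warning, here is a deliberately simple Python linter. It is not Google’s validator and does not reproduce its rules. It assumes a small set of recognized fields (user-agent, allow, disallow, sitemap, crawl-delay) and flags only three things: lines missing a colon, unrecognized field names, and Allow/Disallow/Crawl-delay rules that appear before any User-agent line. Each warning carries its 1-based line number, the way an editor would show it. The function name `lint_robots` and the sample file are hypothetical.

```python
# Assumed set of recognized fields; real crawlers may accept others.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}
# Fields that only make sense inside a User-agent group.
GROUP_FIELDS = {"allow", "disallow", "crawl-delay"}


def lint_robots(robots_txt: str):
    """Return a list of (line_number, message) warnings, numbered from 1."""
    warnings = []
    seen_user_agent = False
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append((number, "missing ':' separator"))
            continue
        field = line.split(":", 1)[0].strip().lower()
        if field not in KNOWN_FIELDS:
            warnings.append((number, f"unrecognized field '{field}'"))
        elif field == "user-agent":
            seen_user_agent = True
        elif field in GROUP_FIELDS and not seen_user_agent:
            warnings.append((number, f"'{field}' appears before any User-agent line"))
    return warnings


SAMPLE = """\
Disallow: /tmp/
User-agent: *
Disalow: /myaccount/
Allow /products/
"""

if __name__ == "__main__":
    for number, message in lint_robots(SAMPLE):
        print(f"line {number}: {message}")
```

On the sample it warns about lines 1, 3, and 4: a rule outside any group, a misspelled directive, and a missing colon. A typo like `Disalow` is worth catching because a crawler would likely just ignore that line, silently leaving /myaccount/ open.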

The robots.txt file has always been a small but impactful tool in every digital marketer’s toolbox. This update gives internet marketers yet another reason to spend a great deal of time in Webmaster Tools. If you are not already comfortable spending time there, then we have another discussion to have. We have covered plenty of other Webmaster Tools features before, so feel free to look around our blog for more information.